Dear SingularityNET Community,

During 2024, SingularityNET made huge strides toward Artificial General Intelligence (AGI) and achieving our mission, laying the foundation to lead in the AGI era. We continued to transform our Foundation, innovate, move forward on our AGI R&D roadmap, build the decentralized infrastructure for the global economy, embrace open collaboration, and guide the development of neural-symbolic AGI in a beneficial direction.

We formed the Artificial Superintelligence (ASI) Alliance, the largest open-source, independent AI R&D initiative, free from corporate and governmental control. Through this collaboration between SingularityNET, Fetch.ai, Ocean Protocol, and CUDOS, the Alliance provides an open, decentralized technology stack for the research, development, and commercialization of artificial intelligence (AI), offering a framework that will power the future of AGI and beyond.

We advanced the fundamental science of several critical technologies. We announced the Alpha release of the open-source OpenCog Hyperon AGI framework, scaled the interpretation and compilation of the MeTTa language for cognitive computations, introduced the MettaCycle Layer 0++ technology for secure, scalable, and performant decentralized computing, and accelerated the development of AGI-optimized software and hardware architectures.

R&D also introduced AIRIS (Autonomous Intelligent Reinforcement Inferred Symbolism), one of the first intelligent systems capable of autonomous, adaptive learning with real-world applicability. We deployed AIRIS in the Minecraft 3D gaming environment to help increase the generality of autonomous agents and the data efficiency of learning goal-directed behaviors.

Our Platform development efforts advanced the foundation for decentralized AI infrastructure, establishing comprehensive frameworks for next-generation AI services. Our teams delivered significant enhancements across the ecosystem, from sophisticated marketplace capabilities and training frameworks to advanced developer tools and streamlined onboarding systems. These developments, combined with multi-chain integration and enhanced security protocols, reinforce our vision of an accessible, decentralized AI ecosystem. This ecosystem will serve as the decentralized infrastructure for the global economy as the world enters the AGI era.

We invested in energy-efficient and sustainable hardware infrastructure to accelerate our AGI and ASI development. In June, we committed $53 million to establish the first modular supercomputer dedicated to decentralized AGI evolution, alongside state-of-the-art High-Performance Computing facilities. Our partnership with Tenstorrent further strengthens this foundation, enabling the development of specialized AGI-optimized chip architectures to advance our hardware capabilities.

Our ecosystem projects demonstrated their abilities to transform research into vertical and domain-specific solutions across decentralized finance, robotics, biotech and longevity, gaming and media, arts and entertainment, and enterprise-level AI. These advances are poised to multiply the impact of our AI technologies across society and businesses.

Recognizing that AI and AGI stewardship should not be confined within Big Tech or government walls, we conducted ethical reflection, launched programs and offered grants to aid and accelerate the growth of open-source decentralized initiatives, and fostered responsible AI practices across our ecosystem. In February 2024, we held the inaugural Beneficial AGI Summit in Panama to discuss how to guide AGI governance and leverage generally intelligent machines to support ethical, social, and economic progress.

Our perspective on AGI is clear and consistent: AGI needs to be in the hands of humanity at large, controlled by no one person or organization and enjoyed by everyone. That is why we will continue to provide practical solutions to help accelerate progress toward decentralized beneficial AGI. We will actively expand our involvement with wider stakeholders to mitigate the socio-ethical risks of frontier technologies, bridge the divides between the developed and developing world, and reconcile technological, economic, and social progress with environmental sustainability.

We enter 2025 more committed to building beneficial AGI and ASI than ever, more strategically focused, technologically capable, and positioned for massive acceleration and scaling.

Janet Adams, COO of SingularityNET

In 2024, we progressed the technical development of the Hyperon framework for AGI and beyond, transitioning from initial proof-of-concept and prototyping phases to establishing the framework as a functional software platform with defined product development trajectories.

In April, the Alpha release of the MeTTa cognitive language, a core component of Hyperon, marked a significant milestone in Hyperon’s development. This release coincided with the formation of an open-source community of Hyperon developers and MeTTa programmers.

To support community development, ongoing biweekly collaborative sessions (MeTTa Coders) continue to address two primary domains: technical discussions on MeTTa programming and strategic meetings focused on AGI development using the Hyperon framework. Through the Deep Funding (DF) initiative, we offered $1.25M in grants to foster this community, grow the adoption of MeTTa, and advance beneficial AGI R&D via the Hyperon framework.

At the 17th AGI Conference (AGI-24) in Seattle, a Hyperon Workshop offered participants hands-on experience with the MeTTa language, covering both its fundamentals and advanced applications. The workshop also showcased a range of Hyperon applications, with presentations on PRIMUS, Neoterics, Mind Children, PLN/Pattern Matcher, Rejuve.Bio’s Neural-symbolic Hypothesis Generation, MeTTa-NARS, NACE, and more.

Following the Alpha release, the Hyperon framework advanced through several key development phases during 2024, pursuing AGI through an open, decentralized approach that leverages blockchain and distributed networks to foster collaboration and ensure equitable access to frontier technologies.

The Alpha release of the MeTTa interpreter prioritized usability, incorporating comprehensive documentation and enhanced accessibility features. The existing code documentation was expanded, and functionality for managing documentation directly within MeTTa was integrated. Tutorials were published on the metta-lang.dev web portal, along with an online interpreter that provides executable examples and a sandbox environment, enabling users to explore the language without requiring local installation.

MeTTa was published as a Hyperon package on PyPI, offering support to multiple platforms, including Apple silicon, and thereby simplifying installation for Python users. The implementation of the interpreter in Minimal MeTTa (a concise set of basic instructions used to describe the interpreter) was fully aligned with the initial ad hoc Rust implementation, leading to more simplified and stable semantics. The core subset of the standard library was refined to minimize potential backward compatibility issues, and the Alpha release included a module system to allow out-of-the-box usage of additional MeTTa libraries.

Following this release, development continued on these and other components, including the expansion of the stdlib functionality, publication of new tutorials, and a significant merger of the Rust-MeTTa and Minimal MeTTa interpreters. This merger yielded a 50x performance improvement for the Minimal MeTTa version. Another notable technical achievement was the implementation of universal access to Python modules and classes, allowing direct method calls from MeTTa without the need for additional wrappers.

These comprehensive enhancements significantly matured the interpreter, driving its advancement in conjunction with the development of other core Hyperon components.

The integration of the Distributed Atomspace (DAS) with MeTTa was initiated around the time of the Alpha release. This integration necessitated the development of missing DAS functionality and a dedicated DAS adapter in MeTTa. This adapter, responsible for converting MeTTa code into DAS queries and interpreting the results back in MeTTa, was the first module developed in MeTTa beyond the standard library.

Subsequent DAS development focused on implementing an innovative cache subsystem that integrates with the Query Engine API and AttentionBroker to set Short and Long Term Importance. This approach diverges from traditional caching by prioritizing the relevance of query results through sorting and partitioning, which offers significant advantages for AI agents conducting combinatorial searches. The implementation leveraged a multi-database approach, routing queries across backends like MongoDB and Redis to optimize performance and scalability. The successful deployment of the DAS on NuNet nodes by year end demonstrated its practical functionality within distributed computing environments.
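To make the idea of relevance-ordered results concrete, the following Python sketch sorts query results by an importance score and hands them out in fixed-size partitions, most important first. It is an illustration only and not the DAS implementation: the function name `partition_by_importance`, the `importance` mapping (standing in for values an attention component might supply), and the atom identifiers are all assumptions made for this example.

```python
from typing import Dict, Iterator, List, Sequence


def partition_by_importance(
    results: Sequence[str],
    importance: Dict[str, float],
    partition_size: int,
) -> Iterator[List[str]]:
    """Yield query results in partitions, most important first.

    `importance` is a hypothetical stand-in for scores that an
    attention component could provide; it is not a DAS API.
    """
    if partition_size <= 0:
        raise ValueError("partition_size must be positive")
    # Results with no recorded importance default to 0.0 and sort last.
    ranked = sorted(results, key=lambda r: importance.get(r, 0.0), reverse=True)
    for start in range(0, len(ranked), partition_size):
        yield ranked[start:start + partition_size]


# Hypothetical query results and importance scores.
results = ["atom-a", "atom-b", "atom-c", "atom-d", "atom-e"]
importance = {"atom-a": 0.1, "atom-b": 0.9, "atom-c": 0.4, "atom-e": 0.7}
partitions = list(partition_by_importance(results, importance, partition_size=2))
```

An agent running a combinatorial search could consume the first partition and stop early, which is the advantage over a cache that returns results in arbitrary order.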

The OpenCog Hyperon framework for AGI R&D supports multiple cognitive architectures, including one-off question-answering chat systems, theorem provers, and even cognitive architectures designed to emulate aspects of the human mind. However, one particular cognitive architecture, CogPrime, has remained central to the development of OpenCog Classic.

In 2024, this trajectory shifted with the design of PRIMUS, a next-generation architecture intended for implementation within Hyperon. PRIMUS builds upon the foundation of CogPrime, incorporating both the unique capabilities of Hyperon and the latest advancements in AGI and neural-symbolic AI.

PRIMUS will continue to incorporate subsystems for uncertain inference, including Probabilistic Logic Networks (PLN) and Non-Axiomatic Reasoning System (NARS), evolutionary methods such as MOSES, attention and resource allocation, motivation, goal generation, perception, action, concept formation, support tools, and more. Driven by curiosity, PRIMUS will actively experiment with and explore its environment, leveraging causal and temporal reasoning to construct and refine its internal world model.

While incorporating recent AI advancements (e.g., LLMs) to enhance its internal modules, the dynamics of PRIMUS will remain guided by the principle of cognitive synergy. This principle dictates that the cognitive processes within PRIMUS will collaborate rather than conflict. If one process encounters an obstacle, it can translate its intermediate state into a format understandable by other processes and solicit their assistance. This will result in an emergent intelligence that surpasses the capabilities of its individual parts, fostering continuous evolution.

PLN is a logic designed to emulate human-like common sense reasoning. This logic excels at handling uncertainty, empirical and abstract knowledge, and the relationships between them. While closely resembling Non-Axiomatic Logic (NAL), PLN is firmly grounded in probability theory. For example, the notion of uncertainty is captured using second-order probability distributions. This logic provides a common language that contributes to the cognitive synergy.
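As a rough illustration of what a second-order distribution buys, the Python sketch below models the unknown probability of a statement with a Beta distribution built from evidence counts. This is a generic textbook construction chosen for the example, not PLN's actual truth-value formulas; the class name `BetaBelief` and the uniform prior are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """A distribution over a probability, built from evidence counts.

    Illustrative only: a Beta distribution with an assumed uniform
    prior, not the truth-value representation used by PLN.
    """
    positive: float          # observations supporting the statement
    negative: float          # observations contradicting it
    prior_alpha: float = 1.0
    prior_beta: float = 1.0

    @property
    def alpha(self) -> float:
        return self.prior_alpha + self.positive

    @property
    def beta(self) -> float:
        return self.prior_beta + self.negative

    def mean(self) -> float:
        """Expected value of the underlying probability."""
        return self.alpha / (self.alpha + self.beta)

    def variance(self) -> float:
        """Spread of the distribution; shrinks as evidence accumulates."""
        total = self.alpha + self.beta
        return (self.alpha * self.beta) / (total * total * (total + 1.0))


# Same 3:1 ratio of evidence, very different amounts of it.
weak = BetaBelief(positive=3, negative=1)
strong = BetaBelief(positive=300, negative=100)
```

Both beliefs point to a probability near 0.7, but `strong.variance()` is far smaller than `weak.variance()`: the second-order view separates how likely a statement is from how much evidence backs that estimate.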

As an early test case for MeTTa implementation, PLN became the first cognitive component targeted for integration, long before the Alpha release. This resulted in the exploration of MeTTa’s idiomatic constructions and revealed missing features. The porting process was approached from different angles: proofs as match queries, proofs as custom Atom structures, proofs as programs, and properties as dependent types. Currently, the last of these represents the most advanced approach.

Central to the dependent type approach is the implementation of a generic program synthesizer. Program synthesis experiments with uncurried backward chaining were conducted. Furthermore, to implement a constructive version of the Pattern Miner, function calls within logical rules were introduced as a chainer extension.

Different versions of the forward and backward chainers in MeTTa were prototyped and tested, using both proofs as programs and proofs as program execution traces.

Inference control experiments were also conducted, covering the Curried Backward Chainer, Controlled Backward Chainer, Maximum Depth Controlled Backward Chainer, and Reasoning-based Controlled Backward Chainer, along with subtyping inference experiments.

A notable PLN application is mitigating LLM hallucinations. This is achieved by asking an LLM to translate natural language statements into a logical (PLN) representation, either directly or using Lojban as a proxy. PLN can then verify the internal consistency of the statements and fact-check them against a knowledge graph (KG). A functional prototype of this system, demonstrating improvements in LLM question-answering through hallucination reduction, was presented during our technical Hyperon meetup in Brazil.

The Economic Attention Networks (ECAN) implementation in MeTTa has been progressing through two parallel tasks: porting the previous C++ attention bank implementation into MeTTa and fixing a previous experimental implementation in C++.

MOSES (Meta-Optimizing Semantic Evolutionary Search) is an evolutionary algorithm designed to evolve computer programs by optimizing them toward a specific goal or fitness function. MOSES uses a family of probabilistic modeling techniques called Estimation of Distribution Algorithms (EDAs) to build and refine populations of programs. It searches for optimal or near-optimal solutions by exploring the space of program structures in a more guided and efficient way, leveraging probabilistic models to predict and generate promising new program candidates. This makes it suitable for complex, symbolic tasks in the pursuit of AGI.
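The core loop of an Estimation of Distribution Algorithm can be sketched in a few lines of Python. The example below is a deliberately simplified stand-in: it evolves fixed-length bit strings with independent per-bit probabilities (a univariate model) and a toy fitness function that counts ones, whereas MOSES works on program structures with richer models. The function names, parameter defaults, and clamping bounds are all assumptions made for illustration.

```python
import random
from typing import List, Optional, Tuple


def one_max(bits: List[int]) -> int:
    """Toy fitness: the number of 1 bits."""
    return sum(bits)


def univariate_eda(
    n_bits: int = 12,
    pop_size: int = 60,
    n_elite: int = 20,
    generations: int = 30,
    seed: int = 0,
) -> Tuple[List[int], List[float]]:
    """Sample a population, keep the fittest, refit the model, repeat."""
    if generations < 1 or not 0 < n_elite <= pop_size:
        raise ValueError("need generations >= 1 and 0 < n_elite <= pop_size")
    rng = random.Random(seed)
    # The probabilistic model: one independent probability per bit.
    marginals = [0.5] * n_bits
    best: Optional[List[int]] = None
    for _ in range(generations):
        # 1. Generate candidates by sampling from the current model.
        population = [
            [1 if rng.random() < p else 0 for p in marginals]
            for _ in range(pop_size)
        ]
        # 2. Select the most promising candidates.
        population.sort(key=one_max, reverse=True)
        elite = population[:n_elite]
        if best is None or one_max(elite[0]) > one_max(best):
            best = elite[0]
        # 3. Re-estimate the model from the elite, clamped so that
        #    no bit value becomes impossible to sample.
        marginals = [
            min(0.95, max(0.05, sum(ind[i] for ind in elite) / n_elite))
            for i in range(n_bits)
        ]
    assert best is not None
    return best, marginals


best, marginals = univariate_eda()
```

The search is guided by the model rather than by random mutation alone: each generation shifts the sampling probabilities toward whatever the best candidates had in common.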

The MOSES port from OpenCog Classic to MeTTa has started. SG-GP (Stochastic Grammar Genetic Programming) and SGE (Structured Grammatical Evolution) implementations are in progress.

At the beginning of 2024, the port of NARS to MeTTa was enabled by the MeTTa-Morph extension, which compiles some computations in MeTTa using a Scheme compiler. This technical advancement facilitated NARS demonstration across multiple application domains.

The NARS+GPT project was ported from OpenNARS to MeTTa with the use of the MeTTa-Motto library for communicating with LLMs. Testing MeTTa-NARS+LLMs on a question-answering benchmark yielded promising results.

The Non-Axiomatic Causal Explorer (NACE) navigational system was implemented with the use of MeTTa. The “Explorer 1” design for NACE was introduced, resulting in the Integrated Hybrid System (NARCES) featuring semantic-to-sub-symbolic interconnection in NARS.

The experiment with Non-Axiomatic Logic truth values for Signal Temporal Logic formulas laid the groundwork for future research on an Integrated Hybrid System featuring semantic-to-sub-symbolic interconnection.

The NARCES framework was integrated with the Robot Operating System (ROS 2), resulting in a live demonstration of robotic manipulator control within a simulated environment. This demonstration highlighted the framework’s ability to integrate complex cognitive processes.

The evolution of the MeTTa interpreter has enabled the development of key libraries, including the SingularityNET Platform software development kit (SDK) in MeTTa and MeTTa-Motto, laying the groundwork for a Hyperon-powered Knowledge Layer.

In 2024, the SingularityNET Platform SDK was released as a standard MeTTa module, distributed alongside the MeTTa interpreter. This provides comprehensive Platform functionality accessible through MeTTa programs, including listing organizations and services from the Platform registry, executing free calls, managing payment channels, and processing deposits for paid calls.

By automatically generating MeTTa functions for service calls and abstracting protobuf details, the MeTTa module streamlines Platform service calls to be as straightforward as invoking standard MeTTa functions. This capability enables service specification design in MeTTa and provides a pathway for utilizing these services as Hyperon plugins.

Concurrently, AI-DSL demonstrated significant progress in automatically identifying suitable services and composing them based on provided task specifications.

Our MeTTa-Motto library, initiated in late 2023 as a wrapper for calling ChatGPT from MeTTa, facilitates the integration of LLMs with MeTTa, allowing developers to leverage the capabilities of both technologies. This integration enables the development of AI applications that utilize natural language processing (NLP) and symbolic reasoning.

MeTTa-Motto recently expanded to include integrations with OpenRouter (providing proxy access to multiple LLMs), the Anthropic API, LangChain infrastructure, vector embeddings for retrieval-augmented generation, and ontological systems like Wikidata and DBpedia.

The key technical distinction of MeTTa-Motto lies in its ability to bridge LLM interactions with MeTTa’s logical processing. This enables several capabilities: composing and chaining LLM calls using MeTTa scripts; processing LLM outputs as MeTTa expressions for further reasoning; using MeTTa’s symbolic knowledge to construct targeted prompts; managing stateful dialogues through specialized agents; and accessing external tools and data sources through LangChain integration.

A notable achievement was the implementation of native adapters in MeTTa-Motto for calling LLM and KG services on the Platform, using the SingularityNET SDK in MeTTa. This development paves the way for the Knowledge Layer on the Platform, with access to KGs and LLMs from Hyperon within a unified framework.

Another significant trend in MeTTa-Motto’s evolution is the incubation of an agent framework. This framework is emerging as a standalone MeTTa library with multi-threaded event processing capabilities. Its design supports not only conversational agents with features like interruption handling and speech-canceling but also extends to robotic control.

The introduction of Hyperon as a platform for AGI R&D and practical applications highlighted the importance of ongoing efforts to enhance performance and scalability. Building upon the foundational work undertaken in 2024 across multiple complementary approaches, addressing the fundamental challenges of AGI scalability remains a primary focus for 2025.

These parallel development tracks embody the project’s open and decentralized nature and reflect the complex requirements of a language designed to serve as an integration point between diverse algorithmic paradigms, including evolutionary algorithms, symbolic logical inference, and neural systems.

In 2024, we initiated the development of a MeTTa-to-JVM (Jetta) compiler in Kotlin. The compiler is initially designed to cover a subset of the MeTTa language, including custom functions operating on grounded types such as numbers, strings, booleans, and others. Currently, the compiler supports the compilation of simply typed functional programs.

A compiler server and an adapter were implemented within the MeTTa interpreter via its application programming interface (API), enabling MeTTa programs to send specific code segments for compilation and subsequently utilize the compiled functions during interpretation. This approach has demonstrated performance improvements approaching a million-fold speedup for functions such as factorial calculations.

The compiler has been further enhanced to support lambda expressions and higher-order functions. This capability allows MeTTa programs to compile code generated at runtime, a crucial feature for speeding up genetic programming and other program synthesis methods.

The recent introduction of nondeterminism parameterized with map functions to Jetta represents a significant advancement, enabling more flexible and powerful computational capabilities. This is a core aspect of the MeTTa language given the nondeterministic nature of pattern matching.

With these advancements in performance optimization and its potential to function as a pluggable component within the MeTTa ecosystem, Jetta demonstrates considerable promise for future development and broader applications.

A parallel initiative, the MeTTa Optimal Reduction Kernel (MORK), focuses on an algebraic approach to composing Atomspace-wide operations. This implementation is based on a specialized, zipper-based, multi-threaded virtual machine, which provides native and highly scalable parallelism. By rearchitecting certain Hyperon bottlenecks, MORK has the potential to achieve performance improvements ranging from thousands to millions of times for key use cases.

Current results include a draft PLN implementation built on top of MORK and a demonstration of loading 1 billion MeTTa atoms representing a biomedical dataset. MORK also features a batched search for the closest hypervectors representing MeTTa atoms, which is currently a bottleneck under investigation.

Another parallel development track is MeTTaLog, which implements a MeTTa transpiler built on the Warren Abstract Machine in Prolog. This approach leverages Prolog’s efficient unification and inference engine. MeTTaLog boasts the highest compatibility with the MeTTa interpreter among the parallel efforts. This includes a functional PLN implementation within MeTTaLog and partially complete integration with MeTTa libraries. Furthermore, MeTTaLog demonstrates the capacity to load and query a billion atoms, exhibiting speedup in certain cases.

Recent demonstrations of MeTTaLog have shown promising performance improvements, particularly in concurrent execution and data structure optimization. These parallel development tracks, including Jetta, MORK, and MeTTaLog, continue to progress, each offering unique solutions to the challenges inherent in building a scalable AGI “language of thought.”

We deployed the AIRIS experiential learning system within the Minecraft 3D gaming environment, marking one of the first intelligent systems capable of autonomous, adaptive learning. This development will leverage Fetch.ai’s agent technologies, CUDOS’ compute infrastructure integration, and Ocean Protocol’s data frameworks for memory management, creating comprehensive individualized user experiences.

The system implementation demonstrates significant capabilities in dynamic navigation, obstacle adaptation, and efficient pathfinding protocols. AIRIS architecture enables real-time environmental adaptation without extensive retraining requirements, implementing dynamic rule generation through partial observation frameworks. The system’s functionality extends across complex terrain management including water bodies and cave systems, while maintaining computational efficiency for real-time rule application.

The development roadmap focuses on enhanced object interaction capabilities, multi-agent collaboration frameworks, and advanced reasoning protocols. System architecture aims to expand beyond navigation to include sophisticated decision-making frameworks for contextual tasks, social dynamics, and strategic problem-solving. These implementations establish foundations for real-world applications across autonomous robotics, smart assistance systems, and adaptive learning frameworks.

The AIRIS architecture consists of four primary components: the Rule Base for learned rules, the State Graph containing the world model of current and future predicted states, and Pre-Action and Post-Action Observation sequences. The system builds sets of causal rules from observations of environmental changes, enabling autonomous learning without pre-specified logical knowledge or encodings.

The system’s State Graph creation implements Focus Values compilation from state changes, reducing computational complexity through targeted attention mechanisms. The system evaluates rules through precondition match scoring (on a scale from 0 to 1) and priority queue management, with State Confidence metrics for prediction validation. This architecture enables generalization capabilities through partial state representation rather than complete state transitions.
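The rule evaluation step can be pictured with a small Python sketch. It is not the AIRIS code: the `Rule` class, the scoring formula (fraction of preconditions that match a partial observation), and the Minecraft-flavored example values are assumptions chosen to illustrate scoring in the 0 to 1 range combined with a priority queue.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Rule:
    """A hypothetical learned rule: preconditions and a predicted effect."""
    name: str
    preconditions: Dict[str, str]
    predicted_effect: str


def match_score(rule: Rule, observation: Dict[str, str]) -> float:
    """Fraction of preconditions satisfied, between 0.0 and 1.0.

    Features missing from a partial observation count as unmatched.
    """
    if not rule.preconditions:
        return 0.0
    matched = sum(
        1 for key, value in rule.preconditions.items()
        if observation.get(key) == value
    )
    return matched / len(rule.preconditions)


def rank_rules(rules: List[Rule], observation: Dict[str, str]) -> List[Tuple[float, str]]:
    """Return (score, rule name) pairs, best match first."""
    heap: List[Tuple[float, int, str]] = []
    for index, rule in enumerate(rules):
        score = match_score(rule, observation)
        # heapq is a min-heap, so the score is negated; index breaks ties.
        heapq.heappush(heap, (-score, index, rule.name))
    ranked = []
    while heap:
        negated, _, name = heapq.heappop(heap)
        ranked.append((-negated, name))
    return ranked


rules = [
    Rule("step_forward", {"ahead": "air", "below": "grass"}, "agent moves forward"),
    Rule("swim", {"ahead": "water"}, "agent enters water"),
    Rule("jump", {"ahead": "stone", "below": "stone"}, "agent climbs block"),
]
observation = {"ahead": "water", "below": "grass"}
ranked = rank_rules(rules, observation)
```

Because rules are scored against whatever part of the state is visible, a partially matching rule can still be considered, which is one way to read the generalization through partial state representation described above.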

Key achievements in testing environments demonstrate AIRIS’s efficient learning capabilities, with the system requiring significantly fewer training iterations than traditional reinforcement learning approaches. The system’s ability to adapt objectives without efficacy impact and transparent rule generation provides advantages for autonomous agent development. These capabilities position AIRIS as an innovative approach to causality-based AI, moving beyond traditional expert systems while maintaining scrutability and operational efficiency.

In 2024, we launched a $1.25M R&D grant program through our Deep Funding initiative to advance decentralized, benevolent, and beneficial AGI. The program comprised 14 Requests for Proposals (RFPs) focused on advancing the OpenCog Hyperon framework for AGI at the human level and beyond, representing a strategic approach to open decentralized AI R&D.

The RFPs generated considerable global interest, with 124 proposals submitted from individuals and teams worldwide, highlighting the growing AI community engagement with our hybrid neural-symbolic approach to AGI R&D and vision of open decentralized AGI. The proposals covered a diverse range of research areas, including the use of LLMs for the Meta-Optimizing Semantic Evolutionary Search (MOSES) Evolutionary Algorithm, neural-symbolic deep neural network (DNN) architectures, clustering heuristics in the MeTTa programming language, AGI motivation systems, probabilistic logic network guidance, quantum computing, and AGI-specific hardware configurations.

The RFP evaluation process assessed each proposal’s technical strength, potential for integration, and contribution to our broader AGI strategy. Experts were assigned to RFPs based on their specific areas of expertise. After individual assessments were completed, all reviews were compiled and shared among the experts. Final reviews were conducted by teams of senior technologists, each composed of members with RFP-specific expertise. To make the process completely transparent, we have made all proposals and individual reviews publicly available.

Our grant program prioritized open-source development principles, requiring comprehensive documentation, public code repositories, and detailed technical reports. This approach created a robust collaborative platform, enabling researchers globally to advance cutting-edge AI technologies and reinforcing our commitment to open, decentralized, collaborative AGI R&D.

For details on the awarded projects, please see our official announcement blog.

During 2024, we advanced our decentralized AI Platform development, further augmenting our comprehensive infrastructure for AI service creation and deployment, focusing on decentralization, safety, scalability, and accessibility.

Early in the year, the Platform underwent foundational architectural refinements through environment synchronization between production and testing systems, expanded HTTP type services, and the integration of AI model training capability.

These developments established a robust decentralized AI infrastructure that aggregates multiple AI services on a single decentralized network, and functions as an end-to-end ecosystem of diverse, complex processes and interconnected components. Enhanced user interface architecture, optimized onboarding protocols, and comprehensive documentation frameworks support this infrastructure.

These core functionalities serve as the foundation, supplemented by ongoing R&D initiatives exploring the implementation of next-generation tools and third-party integrations.

The Developer Portal underwent significant technical advancement, transitioning to TypeScript and implementing dual-mode search architecture. The documentation was expanded to include comprehensive SDK integration guides, API specifications, and technical references.

The Portal advanced through improved navigation with section-specific routing, optimized resource loading, and enhanced mobile accessibility. The system integrated VitePress with TypeScript, enabling new layout components in document content, structural refinements, and accelerated Portal development. Search now offers user-selectable display modes and presents the full text line for each result, across both document titles and content.

The Portal’s design incorporates comprehensive UI updates including theme-changing functionality with dual content interaction modes: single-branch mode for collapsing open subsections during navigation and all-branches mode for complete category subsection visibility. Additional features include collapsible content menus, user feedback form implementation for bug reporting and feature requests, expandable image viewing, and revised documentation.

Our decentralized AI Marketplace underwent continuous enhancement, including implementing a Data Preset for service fine-tuning. The Platform integrated advanced dataset analysis capabilities, visualization tools, and improved dataset management features.

Payment architecture improved through enhanced MetaMask integration, with notification service implementation including field validation. System updates incorporated newer library versions, while MetaMask connections now automatically request network or account changes. Payment systems underwent refinement to reduce the number of required signatures.

Training functionality received a complete overhaul, enabling user-driven model creation and service training on custom datasets. The system expanded with comprehensive dataset processing capabilities, including analysis, merging, cleaning, and optimization functions. Newly introduced updates included fixes for authorization, element and route display in the application, and updated validation for authorization forms.

The SDK ecosystem advanced through multi-language development. JavaScript SDK architecture underwent restructuring into Node.js, Web JS, and Common Core components, with new module implementations for payment strategy protocols, model management, and service interaction. System updates resolved transaction formation issues affecting Publisher and Marketplace operations.

Architecture refinements improved client service creation with predefined payment strategies, enabling selective free and paid AI service calls. System optimization included free call functionality fixes and codebase enhancements supporting training operations. Development focuses on writing tests, logging system updates, and Filecoin integration protocols.

The Platform expanded through Boilerplate application deployment, providing Marketplace-independent service access capabilities. This implementation delivers integrated UI frameworks for service calls, wallet connectivity, and FET (ASI) acquisition. Onramp integration in Boilerplate enhanced payment option accessibility for service utilization. Comprehensive documentation provides implementation guidance for Boilerplate deployment and custom UI development.

The Python SDK advanced through the integration of Training v2 protocols, network parameter configuration systems, expanded proto-compilation options, and enhanced test coverage frameworks. Development included automated proto file generation for Marketplace service calls and SDK-CLI dependency separation. Proto file management can regenerate the current service proto files through a force mode.

Platform capabilities expanded to support Python 3.10 and 3.11, incorporating comprehensive refactoring and dependency updates. System enhancements delivered custom organization and service listing methods, simplified config setup protocols, and automated proto-file parsing for service method detection. Infrastructure improvements included detailed internal documentation frameworks and channel caching implementation for optimized paid service access. Development addressed security dependency protocols and introduced Filecoin functionality, with multiple releases deploying system improvements and refinements.

The Daemon component advanced from version 5.1.2 to 5.1.5, implementing Node.js dependency removal, dynamic SSL certificate update protocols, and Zap logging system integration. Logging performance improved by a factor of 1,000, while memory allocation was reduced tenfold. Additionally, embedded etcd logging received configurable management capabilities.

Blockchain functionality expanded through new contract listener implementation for organization metadata change detection and dynamic etcd endpoint reconnection. System updates included deprecated method removal and library updates for EVM and IPFS interactions. Feature implementation delivered configurable free call parameters and simplified configuration protocols for accelerated onboarding.

The Platform integrated custom error codes, enhanced training capabilities, and comprehensive Filecoin and Lighthouse support. Infrastructure development included unit test refactoring and new integration testing for gRPC request proxy methods between client and provider services. The CI/CD framework was optimized for greater automation.

Training V2 development delivered refined training methodologies and new architectural implementations. System updates incorporated a new “proto” file design with enhanced AI model communication protocols, with multiple releases deploying these capabilities throughout the development cycle.

The Publisher Portal advanced through automated UI protocols, enhanced daemon interaction frameworks, and comprehensive onboarding system integration. Platform accessibility expanded through new configuration tools and detailed documentation implementations.

Service and organization registration functionality restored core pipeline operations and introduced multi-organization management capabilities. System optimization included organization duplication verification and streamlined service registration pathways with integrated navigation protocols.

Infrastructure updates delivered enhanced organization service displays featuring pricing and daemon installation specifications. Platform expansion included rapid configuration generation through simplified field entry systems, integrated FAQ frameworks, and multi-format onboarding guidance. System refinements incorporated field validation protocols and optimized marketplace service assembly for accelerated deployment.

The Text User Interface (TUI) advanced through comprehensive design refinements, expanded functionality, and enhanced documentation. Notable developments included Python installation automation protocols, advanced navigation capabilities, and Filecoin integration. The Platform incorporates detailed parameter documentation and streamlined operational guidance for common scenarios.

The Platform Development team supported the Cardano Chang Hardfork integration, implementing comprehensive updates across Cardano node architectures, dbsync implementations, Cardano service protocols, bridge script frameworks, token transfer systems, and staking mechanisms to maintain blockchain compatibility standards.

The UI Sandbox represents a significant advancement in AI service interface development within the SingularityNET ecosystem. This sophisticated development environment addresses historical challenges in AI UI creation, enabling researchers and developers to focus on core algorithm development while ensuring effective user interaction capabilities.

The system architecture delivers comprehensive development tools including file management protocols, compilation systems, preview capabilities, environment variable management, and error-handling mechanisms. Core features include dedicated code development and result visualization areas, incorporating demo mode functionality, frontend compilation modules, and extensively documented element libraries. The Custom Components Library and enhanced project management tools streamline service development processes across the platform.

The UI Sandbox includes feedback systems, FAQ frameworks, and publisher-compatible frontend deployment with existing frontend modification support. The Sandbox implements rapid UI prototyping and deployment capabilities, reducing development time while accelerating AI service adoption. Current development focuses on template library architecture for zero-code frontend assembly, responding to community and developer feedback through systematic improvement cycles.

The Command Line Interface (CLI) underwent systematic enhancement through legacy argument removal, private key and mnemonic encryption protocols integration, and documentation expansion. System functionality advanced through channel caching optimization and comprehensive Filecoin Storage Provider integration.

Filecoin integration established complete file management capabilities through the Lighthouse implementation, while channel caching reduced command execution times. Security frameworks expanded through private key and mnemonic phrase encryption, complemented by metadata and value validation systems. Other developments included updates to automated documentation generation and functional testing covering 30% of commands, with multiple releases deploying these fixes and capabilities.

As part of the Deep Funding initiative, we are developing technical requirements and designing the architecture for a comprehensive system centered around an escrow smart contract. This contract will facilitate milestone-based payments to project teams, ensuring secure and transparent fund management.

Key features include partner escrows, milestone-based payments with multi-signature approval, and flexible payment options in stablecoins or tokens. This system is designed to enhance operational efficiency, promote financial security, and foster trust. We are also updating the Platform’s MPE contract to add new functions, such as adding multiple users to one channel and working on subscription model mechanics.
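The milestone-based, multi-signature release logic can be sketched as follows. This is a plain Python model, not the escrow smart contract itself, which is still being designed. The class names, the approval threshold, and the rule that a milestone pays out once enough distinct approvers have signed are all illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Milestone:
    amount: int  # payout in the smallest token unit
    approvals: set[str] = field(default_factory=set)
    paid: bool = False


@dataclass
class MilestoneEscrow:
    """Hypothetical in-memory model of a milestone escrow."""
    approvers: frozenset[str]
    threshold: int  # number of distinct approvals needed to release
    milestones: list[Milestone]
    balance: int = 0

    def deposit(self, amount: int) -> None:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.balance += amount

    def approve(self, index: int, signer: str) -> None:
        if signer not in self.approvers:
            raise PermissionError(f"{signer} is not an approver")
        # A set ignores repeated approvals from the same signer.
        self.milestones[index].approvals.add(signer)

    def release(self, index: int) -> int:
        milestone = self.milestones[index]
        if milestone.paid:
            raise ValueError("milestone already paid")
        if len(milestone.approvals) < self.threshold:
            raise PermissionError("not enough approvals")
        if milestone.amount > self.balance:
            raise ValueError("escrow balance too low")
        self.balance -= milestone.amount
        milestone.paid = True
        return milestone.amount
```

For example, with three approvers and a threshold of two, a milestone pays out only after two different approvers have called `approve`.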

The revamped Voting Portal introduces enhanced functionality through a tabbed interface, enabling voters to monitor real-time voting progress and verify their voting power. Transparency improvements include visible vote signatures and robust validation mechanisms, with downloadable voting results and balance snapshot data. The system offers comprehensive wallet integration spanning Ethereum, Binance, and Cardano networks, supporting software and hardware wallet connections.

The Token Bridge introduces a modernized interface incorporating GameFi design elements, providing users with positive step completion feedback. It integrates TradingView rate data visualization, conversion history filtering capabilities, and enhanced wallet connection architecture. User experience improvements include guided navigation elements and upfront fee calculations.

The Bridge infrastructure expanded with support for ASI (FET) and WMTx tokens, enabling bi-directional transfers between Ethereum and Cardano networks. Implemented functionality includes ASI cross-chain transfers, native AGIX to ASI migration on Cardano, and WMTx inter-chain conversions. The system incorporates comprehensive token management features including locked tokens conversion support, liquidity pool transfers, fee collection protocols, and conversion ratio frameworks.

As part of the first phase of migrating payment channels to the Cardano blockchain, we prepared a Request for Proposal (RFP) for adapting the MultiParty Escrow (MPE) smart contract. The goal is to move the existing Ethereum-based MPE functionality to Cardano, using its Extended UTxO model. This will enable secure and decentralized transactions between SingularityNET clients and AI service providers. The RFP details how the payment channels will work, including features like token deposits, channel claims, expiration management, and extensions. The implementation will follow Cardano’s UTxO architecture while ensuring the system is scalable, secure, and fits into SingularityNET’s marketplace.
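The channel features listed in the RFP (token deposits, channel claims, expiration management, and extensions) can be pictured with a simplified state model. This sketch is an assumption-laden illustration, not the Ethereum MPE contract or the planned Cardano implementation: the `PaymentChannel` class and its method names are hypothetical, signature verification is omitted, and expiration is modeled as a plain integer block height.

```python
from dataclasses import dataclass


@dataclass
class PaymentChannel:
    """Hypothetical payment channel between a client and a provider."""
    sender: str
    recipient: str
    value: int       # tokens still locked in the channel
    expiration: int  # block height from which the sender may reclaim funds

    def add_funds(self, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.value += amount

    def extend(self, new_expiration: int) -> None:
        if new_expiration <= self.expiration:
            raise ValueError("new expiration must be later than the current one")
        self.expiration = new_expiration

    def claim(self, caller: str, authorized_amount: int) -> int:
        """Recipient claims an amount the sender authorized off-chain.

        A real contract would verify the sender's signature here.
        """
        if caller != self.recipient:
            raise PermissionError("only the recipient can claim")
        if not 0 < authorized_amount <= self.value:
            raise ValueError("amount exceeds channel value")
        self.value -= authorized_amount
        return authorized_amount

    def claim_timeout(self, caller: str, current_block: int) -> int:
        """Sender recovers the remaining funds once the channel has expired."""
        if caller != self.sender:
            raise PermissionError("only the sender can reclaim")
        if current_block < self.expiration:
            raise ValueError("channel has not expired yet")
        refund, self.value = self.value, 0
        return refund
```

On an Extended UTxO ledger, each of these state changes would typically consume the channel's current output and produce a new one, rather than mutating stored state as this model does.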

Concurrently, we have been enhancing Cardano support on the Platform, notably SingularityNET staking. Due to the recent Chang hard fork, this required updates to the Cardano Transaction Library (CTL). These updates remain in development to align with the new system specifications. Upcoming milestones include the finalization of the MPE smart contract and the completion of the new staking system.

For the migration from Cardano Native Token (CNT) AGIX to CNT FET (ASI), essential bridge mechanics for tokens with shared ownership were implemented. This includes a shared ownership token contract and liquidity contract for custodial token transfers, a secure multi-signature approach with external transaction signer services, and the ability to create conversions with a given conversion ratio.
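Creating a conversion with a given ratio comes down to exact integer arithmetic on token units. The sketch below is illustrative only: the `convert` function is hypothetical, the ratio used in the usage note is made up rather than the actual migration ratio, and rounding down is an assumed policy chosen so that a conversion never issues more tokens than the ratio allows.

```python
from fractions import Fraction


def convert(amount: int, ratio: Fraction) -> int:
    """Convert an integer amount of source units into target units.

    The ratio is target units per source unit. Integer floor division
    avoids floating-point error and rounds any remainder down.
    """
    if amount < 0:
        raise ValueError("amount must be non-negative")
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return (amount * ratio.numerator) // ratio.denominator
```

With an illustrative ratio of `Fraction(2, 5)`, converting 1,000 source units yields 400 target units. Token decimals are ignored here; a real bridge would also account for differing decimal places between the two tokens.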

These developments, along with continuous refinements in dataset preprocessing, training model management, and blockchain integration, have significantly advanced our Platform’s capabilities, establishing a robust foundation for future innovations.

The SingularityNET AI Music Lab has advanced significantly in developing an AI-powered music generation system, completing initial development phases to establish foundations for innovative music creation tools.

The initiative began with a comprehensive data acquisition phase, accumulating 170,000 hours of audio content to serve as the foundational training dataset for AI model development. The data infrastructure expanded through systematic metadata extraction, implementing mood and genre classification systems, live audio identification protocols, and NLP-based metadata analysis frameworks.

The development advanced through a comprehensive evaluation of music analysis tools, conducting systematic testing of vocal separation capabilities, structure analysis systems, and metadata assessment frameworks. The Platform implemented defined testing criteria and benchmarking protocols using validated sample datasets. Research initiatives focused on model parameter optimization to enhance generation quality and user control mechanisms.

Current development centers on pilot training and evaluation protocols, establishing foundations for small-scale generation models through baseline system implementation, audio preprocessing frameworks, and checkpoint validation using targeted data subsets.

The technical expansion will focus on medium-scale production scaling, including complete data processing implementation, genre-based classification systems, and robust user checkpoint validation. The model will be released with comprehensive documentation and deployment frameworks.

Parallel development continues in music stem separation technologies, advancing capabilities for vocal and instrumental isolation to enhance dataset quality and support downstream music analysis and generation tasks.

Our AI NLP Lab has made significant progress in LLM research, Generative Language Model (GLM) service development, and the creation of sophisticated dialogue systems.

The Lab’s LLM research direction has focused on fine-tuning and optimizing LLMs for various applications. The team has explored distributed fine-tuning techniques, including Tensor Parallel and Pipeline Parallel with LoRA, to enhance model training efficiency and performance. Additionally, they have investigated methods for utilizing low-resource hardware for large model training, making powerful AI capabilities more accessible.
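To illustrate why LoRA helps with training on low-resource hardware: it freezes a pretrained weight matrix and trains only a low-rank update, the product of a `d_out × r` matrix and an `r × d_in` matrix. The sketch below simply counts trainable parameters for one layer. The layer size and rank are illustrative values, not the Lab's configuration.

```python
def full_finetune_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for a rank-`rank` LoRA update B @ A,
    where B has shape (d_out, rank) and A has shape (rank, d_in)."""
    return rank * (d_in + d_out)


# Illustrative layer: a 4096 x 4096 projection with LoRA rank 8.
full = full_finetune_params(4096, 4096)  # 16,777,216
lora = lora_params(4096, 4096, rank=8)   # 65,536
reduction = full // lora                 # 256
```

In this example the adapter has 256 times fewer trainable parameters than the full matrix, which reduces optimizer state and gradient memory accordingly.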

As for GLM service development, the Lab has designed and deployed services on the SingularityNET AI Marketplace. The team has selected and optimized models and released two versions of the GLM service, each offering enhanced capabilities and performance.

Furthermore, the Lab has successfully trained an Arabic-English translation model, demonstrating its expertise in cross-lingual language processing. The team collected and prepared data, set up a robust cluster environment, and trained the model, achieving significant results.

The AI NLP Lab has also tested cutting-edge hardware, including Tenstorrent T3000. Through this rigorous testing and benchmarking, the team has evaluated the hardware’s capabilities for running LLMs, providing valuable insights for optimizing AI inference.

The Lab has also made strides in developing Fine-tuning as a Service (FTaaS), enabling users to customize language models for specific tasks and domains. The team has designed and implemented the core service architecture, developed a proof-of-concept LLM service, and created an instruction showcase to demonstrate the capabilities of FTaaS.

One of the Lab’s notable achievements is the development of the TWIN dialogue system, an AI-powered system capable of engaging in human-like conversations. The team has conducted extensive R&D, fine-tuning models on various datasets, incorporating advanced techniques such as graph traversal and style modeling, and optimizing the system for high-speed performance. The TWIN system has been successfully deployed in production and is being integrated with various applications.

The AI NLP Lab has also contributed to the development of the SingularityNET Assistant, an AI-powered assistant that provides information and support to users of the SingularityNET Platform. The team has explored various approaches to improve the assistant’s capabilities, including the MeTTa approach, and has developed and released a new version of the system with enhanced features.

The team has also upgraded its earlier work on the Rejuve Automated Insights Generator, contributing to system improvement, prototype development, and the generation of new insights.

Finally, the AI NLP Lab has actively contributed to the advancement of the SophiaVerse project, designing the overall system architecture and developing various modules, including the FreeForm Input module, Dialog Core logic, and Semi-Freeform Output module. The team has also researched different embedding systems, developed an aligned output module for the FreeForm Input, and built a prototype of the FreeForm Output module.

Deep Funding (DF) is a community-driven program created to support our mission of developing decentralized, democratic, inclusive, beneficial AGI. The main goal of DF is to support the growth of our decentralized AI Platform.

The implementation of a custom-built DF portal in 2024 enabled the transition from dependency on a third-party platform to a comprehensive RFP-based funding program. With 15 RFPs currently in progress, the new infrastructure has significantly expanded our funding capabilities.

Technical development advanced through the launch of community.deepfunding.ai reflecting the growing maturity of the Circle-based organizational structure. Community-driven initiatives marked key achievements, including the Developer Outreach Circle’s first grassroots-developed hackathon deployment.

The January 2025 roadmap includes comprehensive platform updates: homepage redesign, RFP category page implementation, Community Hubs initiative launch for community growth and diversification, DFR5 alignment with ASI: Create platform development, and RFP-Create flow design presentation for community-driven ideation.

Key developments through 2025 will deliver RFP-Create flow implementation with ideation campaigns, Open Challenges funding initiative, expanded hackathon capabilities, and enhanced community website features. Technical improvements include Single Sign On (SSO) integration connecting the community site with the portal and dedicated Mattermost environment, alongside advanced portal features with reputation system integration through richer profiles. Backend infrastructure will advance through operations.deepfunding.ai automation processes, while partnership frameworks expand for joint RFPs and Pools.

Additional platform developments and features will emerge through community engagement and technical advancement. This systematic approach establishes a robust infrastructure for continued growth in decentralized funding initiatives.

Visit deepfunding.ai, the DF Telegram channel, or the DF LinkedIn page to explore funding rounds, participation opportunities, and active projects shaping the SingularityNET decentralized AI Platform.

During the year, we laid the foundation for a decentralized AGI supercomputing ecosystem, focused on scalability, adaptability, and high-performance AI processing. Key initiatives included developing strategic infrastructure, forging global partnerships, and optimizing AI workloads to support AGI applications at scale.

We launched a global strategy focused on the rapid expansion of a network of AGI supercomputing facilities. These facilities feature hardware configurations designed to surpass traditional GPU clusters, offering optimal efficiency for task-specific processing units, federated learning, and neural-symbolic AI running on Hyperon. By enabling continual learning, scalable inference, and hybrid AI architectures, these systems are laying the groundwork for AGI powered by next-generation compute infrastructure.

We adopted a multi-location compute strategy to ensure global scalability and redundancy. This network will leverage ecosystem partners for decentralized AI resource sharing and blockchain-based compute distribution. This approach supports secure, cost-efficient execution of AGI workloads.

We entered a strategic partnership with Tenstorrent, a leader in high-performance computing architecture, to co-design and integrate next-generation AGI-optimized chips into our compute ecosystem, enabling higher efficiency in model training and inference. Through a structured, three-phase strategy, this collaboration facilitates more effective scaling of hybrid AI workloads and reduces power consumption, further advancing our vision for a decentralized, high-performance AGI compute network.

The integration of CUDOS into the ASI Alliance has significantly expanded our cloud AI capabilities, enabling the seamless deployment and management of AGI workloads across bare metal, cloud, and decentralized infrastructures. CUDOS’s network provides enhanced scalability and access to high-performance distributed AI hosting, supporting a diverse range of research and enterprise applications. Compared to traditional centralized approaches, the CUDOS cloud model delivers substantial benefits, including improved scalability, greater cost-efficiency, and increased flexibility. Notably, CUDOS enables access to premium AI infrastructure, such as NVIDIA H100 GPUs, at approximately half the cost of Amazon Web Services (AWS).

As a key infrastructure partner for Modular Data Center (MDC) solutions development, Ecoblox ensures the delivery of scalable and adaptable AGI compute solutions. These MDCs will offer flexible, high-performance AI infrastructure tailored for hybrid AI workloads, neural-symbolic processing, and future quantum integrations.

In December 2024, we established a partnership to launch a $24M initiative to install AGI-optimized supercomputing configurations in a state-of-the-art facility in Sweden, marking it as the initial deployment site. This MDC will support large-scale training, inference, and research, powered by NVIDIA L40S GPUs, AMD Instinct processors, and Tenstorrent NPUs with high-speed networking and ethical AI governance compliance.

Further deployments are planned across the globe and we are already exploring locations throughout the Middle East. The combined expertise of Ecoblox and CUDOS in decentralized compute and AI infrastructure is instrumental in driving this global rollout.

In 2025, a primary focus will be the operational launch of our first MDC in Sweden. In collaboration with Ecoblox, CUDOS, Tenstorrent, NVIDIA, AMD, GIGABYTE, ASUS, and Cisco, we will energize this facility with H200 and L40 server racks, establishing it as the first operational AGI compute hub and a blueprint for future expansions across the globe.

Building on this initial deployment, we will collaborate with governments and private sector leaders to establish fund-matched AGI compute centers, fostering global decentralization and accessibility for AGI workloads. In preparation for this global AGI infrastructure expansion, we are actively hiring AI leaders, AGI infrastructure engineers, cloud security experts, and compute optimization specialists to support our MDC rollouts and research initiatives.

To ensure responsible development and deployment, we are also complying with evolving global AI regulations and aligning with global data privacy and security standards. Furthermore, we are engaging renewable energy providers and sustainable data center operators to build low-carbon AGI infrastructure, supporting energy-efficient supercomputing locations.

Concurrently with these infrastructural developments, our technical efforts will be directed toward building robust infrastructure for AGI R&D, developing a scalable compute ecosystem that integrates factual databases, modular AI architectures, and hybrid AI processing for enhanced efficiency and interpretability. We will also align with the industry’s shift toward inference-heavy AI workloads, optimizing our AGI compute to address increasing inference demand, reduce training costs, and ensure low-latency, scalable deployment of AGI models.

Finally, a key initiative in 2025 will be the introduction of a tiered compute service model, developed as part of our global compute strategy. This model will offer services tailored to the needs of diverse stakeholders, ranging from small-scale AGI research to enterprise-grade deployments. Service tiers will include Compute Infrastructure, AI-as-a-Service (AIaaS), Platform-as-a-Service (PaaS), Hyperon nodes, and consulting services.

At SingularityNET, we approach AGI safety, security, and ethics with rigor and depth across diverse technical and philosophical perspectives. We view the development of safe, ethical, and trustworthy AGI as dependent on establishing safe, ethical, and trustworthy technology, data, practices, and design considerations.

Such AGI systems require functionally reliable technology. At minimum, this includes “algorithmic integrity” which can handle data errors, a mechanism to adapt and learn from logic errors, and outputs that human users can inherently trust. The system also requires secure, immutable, authenticated, ecosystem-wide data models that minimize bias to the greatest practical extent.

While necessary, reliable algorithms and data alone do not ensure safe, ethical, and trustworthy AGI. Any AGI system will ultimately reflect the combined ideas and visions of the development team members tasked with building it, with all their inherent biases, inspirations, and ethical strengths and weaknesses. This is why we continue building out a team whose members have developed deep mutual trust in each other’s capabilities and ethical judgment.

We work to clearly understand and acknowledge our own perspectives and biases, sharing these perspectives among our team and larger community, and building shared consensus through civil, open, and deep discussions from as diverse an array of cultural viewpoints as practical. Current development focuses on decentralizing R&D across a broader and more diverse community, engaging in the design, development and deployment of a reliable and comprehensive reputation system.

We also actively engage with diverse safety approaches through our involvement in the OpenAIS (Artificial Immune Systems) community. AIS represent a paradigm shift in AGI security — they are proactive rather than reactive, adapting and responding in real time to evolving threats. While these biomimetic security approaches provide valuable cybersecurity mechanisms, we recognize them as necessary but insufficient components of comprehensive AGI safety.

In terms of designing and implementing safe AGI, we draw insights from complex systems in physics. Just as we can understand gas dynamics through both molecular interactions and macroscopic properties like pressure and temperature, we believe AGI systems need to be developed in a modular fashion with a clear understanding across different scales of operation.

This perspective shapes our view of current transformer-based approaches that attempt to bridge neural computation to human-level understanding through massive parameter optimization. While these systems implement safety through data filtering and feedback loops, we see fundamental challenges in ensuring beneficial outcomes through parameter setting alone — essentially trying to make this leap in one step.

One paradox we have identified in current AI system designs is the prevalence of unencrypted training data paired with opaque models. We advocate for the inverse: building on encrypted data foundations while maintaining model transparency. This includes implementing comprehensive security solutions: Fully Homomorphic Encryption (FHE), noise-based computing, and advanced data lineage tracking, all in collaboration with our partner PureCipher, as well as Secure Multi-Party Computation (SMPC). These technologies work together to create resilient, self-learning systems for data protection, which is crucial as AI systems become increasingly attractive targets for cybersecurity threats.

Our approach emphasizes the importance of data integrity from the outset. As quantum computing advances rapidly, the need for quantum-safe encryption and robust data verification becomes increasingly urgent. Initial data used for model building must be verified, cleansed, tracked for potential tampering, and protected with quantum-safe encryption methods.

By drawing insights from well-understood physical systems while implementing cutting-edge security measures, we are working toward a framework for AGI safety that transcends parameter optimization or singular security measures. Our focus on multi-scale understanding and system transparency represents a practical approach to ensuring beneficial AGI development — one that acknowledges the complexity of the challenge while providing clear principles for addressing it.

The BGI Nexus launched in 2024 as a key initiative under the BGI Collective, establishing new frameworks for ethical AI development. With support from SingularityNET and Humanity+, the initiative implements coordinated approaches to collective intelligence to develop ethical frameworks and governance structures in AI advancement. BGI Nexus has established a structured methodology for guiding AGI development to benefit humanity and other sentient beings.

Throughout 2024, BGI Nexus conducted 43 Fishbowl Interviews at the BGI24 Summit and AGI-24 Technical Conference, gathering global ethical perspectives on AI and AGI from thought leaders and attendees. The Pre-AGI-24 virtual sessions, led and facilitated by SingularityNET Ambassadors, engaged 53 participants in discussions on AI-Human Collaboration, Anthropomorphism and AI, and Indigenous Protocol on AI, while Virtual Conference activities included networking sessions and AI ethics interviews.

Team members participated in key events including the “Ubuntu and AI” collaboration between the Ambassador Program’s African Guild and AI Ethics Workgroup in Nigeria, where LeeLoo Rose examined Africa’s role in emergent technology and Ubuntu philosophy in AI development, while Esther Galfalvi discussed AI governance and ethics initiatives. The BGI Nexus presented at both the Superintelligence Summit in Thailand and the joint SingularityNET-EARTHwise event for Elowyn game’s Solstice Pre-Alpha launch.

The BGI Nexus Book Club has engaged 75 participants across four sessions discussing “The Coming Consciousness Explosion” by Dr. Ben Goertzel and Dr. Gabriel Axel Montes, with ongoing discussions continuing in the Telegram AGI Bookclub channel.

Looking toward 2025, development focuses on the BGI Global Brain Challenge, implementing Virtual Roundtable and RFP Clinic sessions, alongside community engagement activities building toward BGI-25. Technical development includes website enhancement for community engagement, AI chat system deployment for processing community perspectives, and integration of donation mechanisms supporting BGI Nexus projects through DF pools and Community RFPs.

In line with the Foundation’s commitment to decentralization and democratic values, we advanced our operational framework through strategic relocation from Amsterdam, Netherlands to Zug, Switzerland’s Crypto Valley. The transition enables enhanced alignment with Web3 regulatory frameworks while advancing the platform’s core mission of developing beneficial AGI through decentralized, democratic, and inclusive protocols.

The Swiss implementation provides significant advantages through its established crypto-friendly environment and comprehensive regulatory clarity. Zug’s blockchain ecosystem, supporting over 1,600 projects including major platforms like Cardano, Ethereum, Polkadot, and Tezos, provides an ideal environment for advancing both blockchain technology and AI innovation. This environment enables enhanced collaboration opportunities with Web3 partners while supporting the development of new use cases and expanded research initiatives.

Under Dr. Ben Goertzel’s leadership, our Swiss presence will advance ecosystem growth through expanded community development and research programs. The relocation establishes foundations for accelerated progress within a supportive, innovation-friendly setting aligned with the Platform’s vision for democratic and decentralized AI development.

As part of our global expansion strategy, we are pleased to announce the formation of a new subsidiary entity in London, United Kingdom, in 2024. The establishment of the UK business marks an important milestone in growing our operations and presence in Europe.

The decision to expand into the UK was driven by the country’s position as a global leader in AI research, innovation, and commercialization. The UK government has demonstrated a strong commitment to supporting the growth of its AI sector through a range of policy initiatives and investments. The recently unveiled AI Opportunities Action Plan further underscores the nation’s ambition to harness the transformative potential of AI to drive economic growth, boost productivity, and cement its position as an AI superpower.

The AI Opportunities Action Plan outlines a comprehensive approach to developing the UK’s AI ecosystem, focusing on key areas such as infrastructure and talent development, widespread AI adoption, and realizing the technology’s economic impact. The Plan’s focus on creating a supportive environment for AI startups and scaleups, facilitating access to critical resources, and driving productivity gains aligns closely with our mission and growth objectives.

The UK business is well-positioned to capitalize on the opportunities presented by the AI Opportunities Action Plan. Our local presence will enable us to actively contribute to and benefit from the initiatives outlined in the Plan, collaborate with key stakeholders across the AI ecosystem, and play a role in shaping the future of AI in the UK and Europe.

The UK’s thriving AI ecosystem, which includes world-renowned universities, innovative startups, and major technology companies, provides an ideal environment for us to collaborate, innovate, and scale our operations. Our UK company will serve as the focal point for engaging with policymakers, partners, and the broader AI community across the region.

Leading our UK operations is Amal Moussaoui, a seasoned diplomat with extensive experience in negotiating international science and technology agreements with the UK and Europe. She has also worked closely with leading scientists and policymakers in AI and quantum to help address challenges in health, the environment and societal goals.

Through our new local presence, we will be better positioned to contribute to the UK’s AI ecosystem, forge partnerships with key stakeholders, and drive the adoption of our AI Platform and domain-specific solutions across the region. We look forward to working closely with the UK AI community to continue to learn from a wide range of diverse perspectives, advance the responsible development and deployment of AI technologies, and create value for businesses and society at large.

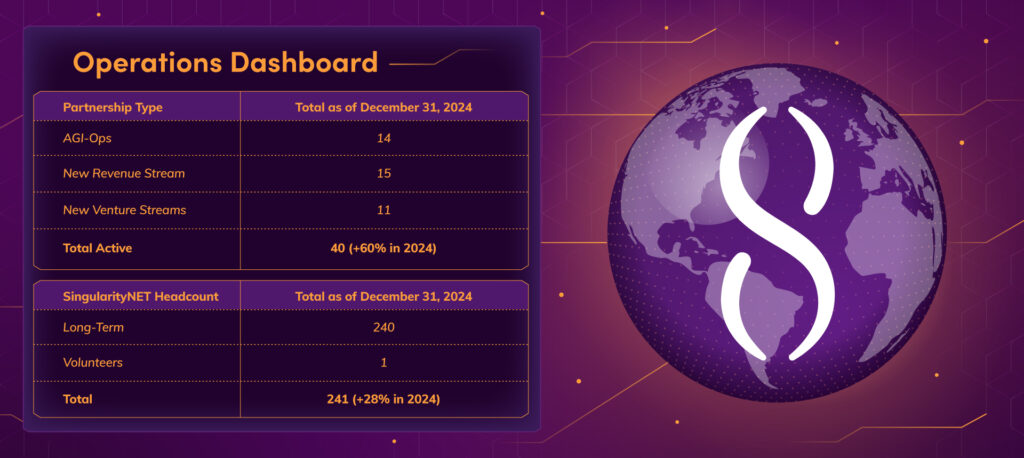

We advanced our organizational development in 2024 through systematic initiatives focused on operational excellence and ecosystem growth. These initiatives included streamlined administrative processes, enhanced contract management systems, and cross-functional data optimization across HR and Finance operations. We also took steps to extend our environmental leadership beyond AI models and infrastructure to operational practices, implementing carbon offset programs for business travel, among other initiatives.

Recognizing the transformative impact of AI and other advanced technologies on operational practices, we implemented enhanced data automation across administrative functions to improve efficiency and decision-making capabilities. Our established operational foundation and the strong performance of our projects have positioned us for strategic team expansion in the first half of 2025. This planned growth reflects our commitment to expanding our impact.

The HR team played a critical role in this transformation, supporting operations through strategic communication frameworks, targeted talent retention initiatives, and systematic approaches to contractor management. Equally important, we remain committed to fostering a culture in which all team members feel they can bring their best selves to work. This commitment is reflected in our organizational design, which creates meaningful opportunities for individual expression and professional contribution. Through inclusive frameworks that support diverse talents and perspectives, we enable team members to fully leverage their unique capabilities within the Foundation and our broader ecosystem.

As we expand our team, our recruitment frameworks continue to prioritize candidates with SingularityNET ecosystem experience in technical and operational roles, valuing a deep understanding of our technical infrastructure and mission alignment. Open positions are available across technical and operational domains, including Software Application Engineer, Risk and Controls Manager, Value Engineer, Events Coordinator, Operations Assistant, and more. Interested candidates are invited to explore current opportunities through our careers page or LinkedIn company page, or to send their resumes for open candidature to recruitment@singularitynet.io.

In 2024, our Strategic Initiatives Office (SIO) continued to advance SingularityNET’s position in the decentralized AI space, with a focused approach to commercialization, revenue modeling, and resource orchestration. Strategic exploration concentrated on emerging technological domains, including decentralized AI infrastructure, cross-chain interoperability, and advanced computational resource optimization.

Our value engineering team, led by Strategic Initiatives Officer Alex Blagirev, developed comprehensive frameworks targeting emerging technological domains and market segments. These frameworks enabled a systematic approach to technological innovation, creating strategic value through targeted initiatives and specialized solution development.

The year marked significant achievements in technical integration, notably completing Phase 1 of the Filecoin integration through Lighthouse SDK, which generated 36,000 impressions and leveraged Filecoin’s 667,000-follower ecosystem. The development of the World Mobile Bridge between Cardano and Ethereum demonstrated our capability to create reusable cross-chain infrastructure.

A key development milestone included delivering an MVP of the AI-powered BIM RAG system for AL’MA Action Logement, where an AI conversational agent provides answers based on a domain-specific knowledge base of Building Information Modeling (BIM) and materials’ carbon footprint documentation. This supports Europe’s leading affordable housing provider in managing 1.2 million housing units and processing over 790,000 employee services.

Strategic partnerships expanded our reach, notably through the BNB Chain collaboration announcement, which reached 3.4 million followers. Infrastructure strengthening continued through partnerships with key validators including BitGo, Kiln, and Figment. The collaboration with Tenstorrent focused on optimizing AI hardware capabilities, while the OriginTrail partnership advanced DKG Explorer integration and explored MeTTa language integration. The ASI Merger progressed through streamlined application processes and successful coordination with the Ocean Protocol and Fetch.ai teams for the CUDOS Merger.

Developer ecosystem growth accelerated through strategic initiatives, including co-hosting the Copa América Hackathon AI track, touted as Latin America’s biggest startup competition with a $1 million prize pool and seed funding, in collaboration with the Solana Foundation, Superteam India, and LATAM. Strategic engagements included meetings with the top 50 Key Opinion Leaders (KOLs) at the Binance Campus in Panama, establishing direct connections with representatives from the Solana Foundation, Avalanche, and BNB LATAM.

The SIO team maintained a strong industry presence through participation in key events including India Blockchain Week, EthCC Brussels, DevCon Bangkok, DKGCon Amsterdam, and Unfold24. We conducted presentations at AL’MA Action Logement headquarters in Paris in June and December, with AL’MA Action Logement subsequently participating in the SingularityNET Ecosystem Bangkok event. The partnership with Humanity 2.0 expanded institutional reach, securing representation at the Pontifical Academy of Sciences (Vatican) as an official delegate and membership in their AI Ethics committee.

Looking toward 2025, our SIO has outlined an extensive development roadmap across multiple sectors. Near-term objectives include completing Filecoin’s second phase integration by January 2025 and implementing a comprehensive hackathon and community-building program. The Solana collaboration will expand through DF proposals for projects using MettaCycle and AI Agents for the Stablecoin strategy. BNB Smart Chain development will focus on Greenfield integration for advanced data storage and DeFi optimization. A new partnership with TRON will integrate the BitTorrent network, enhancing decentralized storage capabilities and AI-based data management.

The DeFi ecosystem will see significant expansion through targeted collaborations with PancakeSwap and Cheesecake, developing AI-enhanced tools for improved functionality and user experience. An upcoming ASI Alliance initiative will focus on providing the DevRel community with learning tools and resources to deepen their understanding of the ASI innovation stack. The Mina Protocol partnership will advance through three phases: zKML development, AI Agents for Governance privacy integration, and joint fund pool establishment. HAQQ collaboration will continue developing ethical blockchain frameworks for AI governance tools, emphasizing sustainable innovation in community development.

These strategic initiatives, alongside other partnerships and collaborations to be announced, will advance our leadership in the decentralized AI space through 2025, with a clear focus on ecosystem expansion, technological innovation, and community growth.

Our marketing team executed a strategic plan to solidify SingularityNET and the ASI Alliance’s position within the decentralized AI and AGI spaces. In response to growing global interest in AI, the team focused on communicating our shared commitment to beneficial AGI through open-source and decentralized methodologies. This involved enhancing visibility and engagement with key stakeholders, including researchers, developers, policymakers, Web3 and AI enthusiasts, and the broader technology community.

The team utilized a structured approach encompassing brand positioning, media relations, and community engagement. Brand positioning activities articulated our core value proposition: a framework for AGI at the human level and beyond, built on a decentralized AI platform designed for secure, efficient, user-friendly, and fully decentralized operation, free from central ownership or control. This involved developing consistent messaging that highlighted our technological capabilities and commitment to decentralization.

Media relations concentrated on securing coverage in relevant technology publications, industry journals, and online platforms. This proactive approach aimed to generate informed discussion, increase brand awareness, and establish SingularityNET as a key player in the AGI race and as a leading voice in, and contributor to, the decentralized AI space. Media outlets were selected based on their reach within target audience segments.

In addition to targeted media relations, the team ensured comprehensive event coverage. Keynote presentations and other significant event activities were documented and subsequently communicated to the community through various channels, including social media and monthly and quarterly blogs. This approach aimed to enhance transparency and ensure accessibility of information related to our R&D efforts.

Community engagement activities were implemented to foster interaction with various stakeholder groups, recognizing the importance of building a robust and active community around the SingularityNET and ASI Alliance ecosystem. Content creation, including technical documentation, blog posts, and social media updates, supported this effort. Content was structured to address the specific interests of diverse audiences, from technical researchers and developers to business professionals and technology observers.

Our strategic community engagement initiatives focused on expanding reach across key social media platforms, notably LinkedIn and X. On LinkedIn, content highlighting technology expertise, advancements, industry events, and advocacy for beneficial AGI drove significant audience growth, with a 47.04% increase in followers within our target demographic of technology professionals and developers. On X, a targeted content approach, including participation in global AI and AGI discussions, reinforced our presence in the decentralized AI space and contributed to a 20.52% increase in followers.

A key differentiator for SingularityNET is our OpenCog Hyperon-based hybrid neural-symbolic approach to AGI development, which contrasts with the LLM-based approaches prevalent among many large technology corporations, startups, and research laboratories. This distinction was a core component of the 2024 communications strategy, highlighting our unique technological direction and advances throughout the year.

To further R&D aligned with this approach, the SingularityNET Foundation established a grant program, allocating $1.25 million through our DF initiative to support individuals and teams focused on beneficial AGI R&D. The marketing team supported this initiative through a targeted campaign that included public relations outreach to relevant media outlets, social media engagement, email communications, and event promotion with flier distribution. The campaign generated significant interest, resulting in 124 submitted proposals and contributing to the success of this inaugural beneficial AGI R&D grant program.

Throughout the year, our community Ambassadors continued to strengthen the transparency and decentralization of the SingularityNET ecosystem, with Core Contributor membership expanding from 31 to 53 members.

The Ambassador program implemented governance rights for 53 contributors based on six-month activity metrics, achieved the first consensus-based governance decisions for Q1 workgroup budgets, and established refined procedures for budget proposals and approvals.

Technical infrastructure development progressed through R&D Guild initiatives, including the implementation of a Proposal Submission System, Consensus Dashboard, Reputation System, and Contributor Portal. The Treasury Automation Workgroup advanced participation metrics and Zoom API integration, while the Gamers Guild established Roblox environment testing in collaboration with SophiaVerse dialogue systems. The Education Guild launched a Certification Program enabling Core Contributors to validate expertise in our decentralized AI ecosystem.

Regional engagement expanded through coordinated Guild activities. The LATAM Guild participated in the Aleph Pop Up City event and the Solana Copa América Hackathon, increasing ASI Alliance visibility in Mexico and Argentina. The African Guild organized the program’s first in-person event in Abuja and hosted sessions on AI solutions from African perspectives. The AI Ethics Workgroup contributed to AGI-24 Conference activities, leading sessions on AI anthropomorphization, Indigenous approaches to AI, and AI-human collaboration.

Program milestones included the 100th Town Hall meeting, successful DF proposal approvals for four Core Contributors, and Ambassador representation at key events, including sponsorship of five Ambassadors to the Beneficial AGI Summit and representation at the Bangkok Ecosystem Festival. Operational improvements included monthly Workgroup synchronization protocols and refined quarterly budgeting processes using Loomio and Swarm AI tools for decentralized decision-making. The Marketing Guild advanced community engagement through targeted Zealy campaigns, while the Strategy Guild was reactivated to drive program-wide implementation.

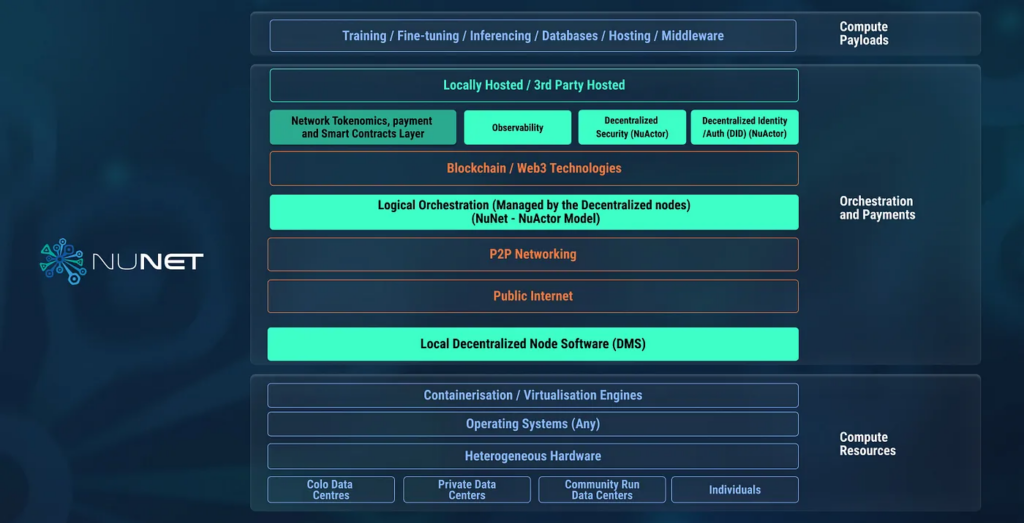

NuNet closed out 2024 with the release of Device Management Service (DMS) v0.5.0, marking a significant step in the roadmap toward a fully decentralized computing ecosystem.

This release laid the foundation for scalable, secure, and inclusive compute sharing by introducing key components: the DMS for seamless integration of devices into a decentralized network, a Logical Orchestration Layer enabling flexible and dynamic task distribution across networks, advanced security features implementing decentralized identifiers (DIDs) and zero-trust interactions, and an Observability Framework providing real-time insights and debugging tools for decentralized workflows.